Want to tap into the wealth of information hidden behind login-protected websites? The ability to scrape a website with a login unlocks a whole new world of data that can boost your business intelligence. Whether you want to track competitors, automate research, or streamline data-driven decisions, website scraping is essential, but it gets trickier when logins are involved.

Fear not! This guide will demystify the process of scraping data from websites that require a login. We’ll cover the essential strategies and tools you need to successfully navigate login barriers and collect the valuable data you’re after.

Your comfort level with code isn’t a barrier. We’ll explore everything from user-friendly, no-code solutions to powerful Python-based approaches for scraping websites with login. By the end, you’ll be equipped with the right methods to suit your project, allowing you to harness the power of data from even protected websites.

What Should You Check Before Scraping Data?

Before we dive into how to scrape a website with a login, there are some important rules of the road. Websites have Terms of Service (TOS), a legal document that outlines how you’re allowed to use them. Some websites specifically ban scraping, so always check the TOS before you start extracting data.

Also, look for a file called robots.txt. This file lets websites tell web crawlers (including scrapers) what parts of the site they are and aren’t allowed to access. Respecting robots.txt is good web citizenship and helps avoid accidentally breaking a site.
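As a rough illustration of the robots.txt check described above, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The robots.txt content, the `my-scraper` user agent, and the example.com URLs are made up for the example so that it runs offline.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs without network access.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether our (hypothetical) user agent may fetch each URL.
print(parser.can_fetch("my-scraper", "https://example.com/account/orders"))
print(parser.can_fetch("my-scraper", "https://example.com/blog/post-1"))
```

Against a live site you would instead call `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()` before checking URLs.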

Beyond the legal stuff, scraping data responsibly matters. Be mindful of things like copyright – don’t re-use information without permission. And unless a website clearly makes it public, avoid scraping personal data – things like names or emails. Scraping ethically keeps you on the right side of the law and shows respect for the data you’re collecting.

How to Scrape Data from a Website That Requires Login?

There are four primary ways to scrape a website with a login:

- No-Code Tools: No-code scraping software makes it easier than ever to collect data from login-protected websites. These tools use visual interfaces, like drag-and-drop functionality, to let you design how the scraper works without having to write a single line of code.

- Python Power: If you’re comfortable with coding, Python is a great choice for crawling websites that require credentials. Libraries like BeautifulSoup and Selenium handle complex tasks such as filling out login forms and navigating content. Although it requires some programming skills, Python offers a great deal of flexibility and allows you to customize every aspect of the data collection process to your needs.

- Cookies and Sessions: Websites often use cookies and sessions to maintain user login status. Understanding these mechanisms can be helpful for some scraping scenarios. Techniques like cookie scraping and session hijacking (not recommended for ethical scraping) involve extracting cookies or manipulating sessions to bypass login forms. However, these methods require advanced technical knowledge and can be unreliable as websites often update their authentication protocols.

- Power BI (for specific use cases): While not a dedicated scraping tool, Microsoft Power BI offers some data import functionalities from web sources. Power BI Desktop allows users to connect to specific websites and import data through web APIs (Application Programming Interfaces) if available. However, this method is limited by the availability of APIs and doesn’t involve scraping website content directly.

How to Scrape a Website with Login Using No-code Software?

No-code software offers a user-friendly alternative to programming languages like Python for scraping data from login-protected websites. These tools excel in their visual interfaces and pre-built functionalities, making them accessible even for those without coding experience. Here’s a breakdown of how to scrape a website with login using no-code software:

- Choose a No-code Scraping Tool: Several popular no-code scraping tools cater to login-protected websites. Widely used options include RPA Cloud, Import.io, Octoparse, and Scrapy with Mantis (note that Scrapy on its own is a Python framework rather than a no-code tool).

- Sign Up and Create a Project: Once you’ve chosen your preferred no-code tool, sign up for an account and create a new project. Most tools will offer a free trial or basic plan to get you started.

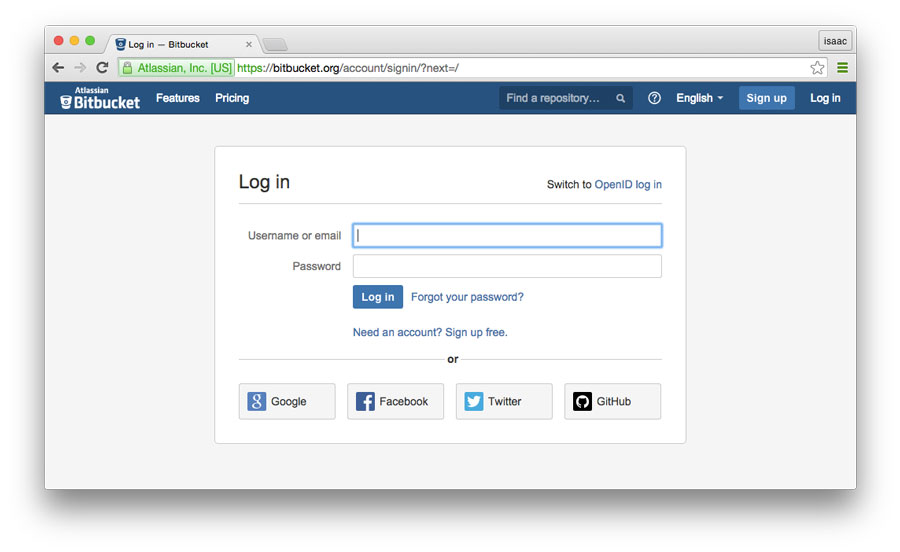

- Record Login Process (Optional): Depending on the specific tool, you might be able to record your interaction with the website’s login form. This involves navigating to the login page and entering your credentials.

- Navigate to Target URL: Specify the website URL containing the data you want to extract. The no-code tool will typically allow you to navigate to this URL within the scraping workflow.

- Identify Data Points: Use the visual interface of the no-code tool to pinpoint the specific data elements you want to extract. This might involve clicking on the desired data points on the website’s preview within the tool. The tool will identify the underlying HTML code associated with that data for extraction.

- Filter and Refine (Optional): Some no-code tools offer filtering and refining options within the scraping workflow. You can filter the extracted data based on specific criteria or manipulate it using basic functions provided by the tool.

- Schedule and Run (Optional): Many no-code tools allow you to schedule automatic scraping tasks. You can set the frequency at which the tool retrieves data from the website, ensuring you have up-to-date information.

- Export Data: Once you’re happy with the scraping configuration, run the workflow to extract the data. No-code tools typically allow you to export the data in various formats like CSV or JSON for further analysis or use in other applications.

Important Notes:

- Free vs Paid Plans: Most no-code scraping tools offer free plans with limitations, such as data extraction quotas or limited features. Upgrading to a paid plan might be necessary for larger-scale scraping projects.

- Website Complexity: While user-friendly, no-code tools might struggle with highly complex websites or those with extensive use of JavaScript.

How to Scrape a Website with Login Using Python?

Here’s a basic outline of scraping a login-protected website with Python using BeautifulSoup and Selenium:

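Import Libraries: Start by importing the libraries you need: BeautifulSoup from bs4, and webdriver from selenium together with its helpers for locating elements and waiting (By, WebDriverWait, and expected_conditions). Import requests as well if you also plan to make plain HTTP requests.

Set Up WebDriver: Create a new instance of the WebDriver class for your browser (e.g., Chrome or Firefox). Selenium 4.6 and later can locate a matching browser driver automatically; with older versions, specify the path to your browser driver.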

Navigate to Login Page: Use the get() method of the WebDriver object to navigate to the website’s login page.

Identify Login Form Elements: Inspect the login page HTML (in your browser’s developer tools, or by parsing it with Beautiful Soup) to identify the username and password input fields, often by ID, name, or class. Then locate them in your script with the WebDriver’s find_element() method.

Populate Login Form: Use the send_keys() method of each located element to enter your login credentials into the username and password fields.

Submit Login Form: Locate the submit button element and call its click() method to simulate a click, submitting the login form.

Wait for Login Confirmation: Websites often redirect upon successful login. Use explicit waits from the Selenium library (WebDriverWait together with the expected_conditions module) to ensure the script waits for the page to load after login before proceeding with data scraping.

Extract Data: Once logged in, utilize Beautiful Soup again to parse the website’s HTML and extract the desired data. You can target specific elements using tags, IDs, or class names.

Process and Store Data: Clean and organize the extracted data as needed. You can store it in various formats like CSV (comma-separated values) or JSON (JavaScript Object Notation) for further analysis.

Close WebDriver: Once scraping is complete, it’s good practice to close the WebDriver instance using the quit() method.

Important Note: Never hard-code your actual credentials in a script. Read them from environment variables or a secrets manager instead, and don’t share code snippets that contain login details, as this is a security risk.

How to Scrape a Website with Login Using Power BI?

Power BI is not designed to scrape login-protected websites directly. However, if a website provides a public Web API for accessing its data, you can leverage Power BI to connect and import the data:

- Identify Web API: Check the website’s documentation or online resources to see if they offer a public Web API for data access.

- Connect to Web API: Within Power BI Desktop, navigate to the “Get Data” section and choose “Web” as the data source. You’ll then be able to provide the Web API URL and authentication details (if required by the API).

- Import Data: Once connected to the Web API, Power BI will guide you through the process of selecting the specific data sets you want to import. You can then transform and analyze the data within Power BI for your visualization and reporting needs.

Important Note: Using public Web APIs is the preferred method within Power BI for accessing website data. It eliminates the need for scraping website content directly and often provides a structured and well-documented way to retrieve the information you need.

However, keep in mind that not all websites offer public Web APIs, and their availability depends on the specific website.

How to Scrape a Website with Login Using Cookies and Sessions?

As mentioned earlier, scraping based on cookies and sessions is not the most reliable or recommended approach. However, for those with advanced technical knowledge, here’s a brief overview:

Cookie Scraping: This involves capturing cookies after a successful login and using them in your script to bypass the login form on subsequent scraping sessions. Techniques like browser extensions or custom scripts can be used for cookie extraction.

Session Hijacking (Not Recommended): This involves intercepting or manipulating session data to impersonate a legitimate user. This is a complex and unethical approach, and security measures on websites often render it ineffective.

It’s important to reiterate that scraping based on cookies and sessions is generally not recommended due to its technical complexity, ethical considerations, and potential unreliability.
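For readers who do want to reuse cookies from their own legitimate login, here is a minimal sketch using the requests library. The form field names (`username`, `password`) and the cookie file are assumptions for illustration. As a simplification, only cookie names and values are saved, so domain, path, and expiry information is lost.

```python
import json
from pathlib import Path

import requests


def login(session: requests.Session, login_url: str, username: str, password: str) -> None:
    """Post the login form; the session keeps any cookies the site sets."""
    # Hypothetical form field names; check the real form's input names.
    response = session.post(
        login_url, data={"username": username, "password": password}, timeout=10
    )
    response.raise_for_status()


def save_cookies(session: requests.Session, path: Path) -> None:
    """Write the session's cookies to a JSON file as name/value pairs."""
    cookies = requests.utils.dict_from_cookiejar(session.cookies)
    path.write_text(json.dumps(cookies))


def load_cookies(session: requests.Session, path: Path) -> None:
    """Load previously saved name/value pairs into the session's cookie jar."""
    session.cookies.update(json.loads(path.read_text()))
```

A later run can create a fresh `requests.Session()`, call `load_cookies`, and request protected pages directly. Once the site expires the cookies, you need to log in again.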

What Are Cookies and Sessions?

Understanding cookies and sessions can be helpful for some scraping scenarios, although scraping based on these mechanisms is not always recommended due to its technical complexity and potential unreliability.

Cookies: These are small pieces of data that websites store in your browser. They typically hold a session identifier or authentication token and site preferences, rather than your password itself. In some cases, capturing the cookies from a logged-in session and sending them from your script lets you skip the login form. However, cookies expire, and websites often implement additional security measures to prevent unauthorized cookie usage.

Sessions: A session is server-side state that tracks a user across requests during a website visit, usually keyed by a session ID stored in a cookie. Session hijacking involves stealing or manipulating session data to impersonate a legitimate user. This technique is highly discouraged due to ethical and legal considerations. Furthermore, websites often update their session management protocols, rendering session hijacking unreliable for scraping purposes.

How to Solve Different Kinds of Authentication?

Websites use various authentication methods to protect their data. The right way to scrape a website with a login depends on the specific method employed.

For basic authentication, the username and password are sent in the Authorization request header. They are Base64-encoded but not encrypted, so use it only over HTTPS. You can use the requests library’s auth parameter to pass your credentials as a tuple. Digest authentication offers increased security by never sending the password itself: the client sends a hash computed from the credentials and a server-supplied nonce. The HTTPDigestAuth class from requests.auth handles this method.
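A short sketch of both methods with requests follows. The example.com URL and the `user`/`pass` credentials are placeholders. The last lines build a request without sending it, so you can inspect the header that basic authentication produces.

```python
import requests
from requests.auth import HTTPDigestAuth


def fetch_with_basic_auth(url: str, username: str, password: str) -> requests.Response:
    """GET a URL using HTTP basic authentication (credentials as a tuple)."""
    return requests.get(url, auth=(username, password), timeout=10)


def fetch_with_digest_auth(url: str, username: str, password: str) -> requests.Response:
    """GET a URL using HTTP digest authentication."""
    return requests.get(url, auth=HTTPDigestAuth(username, password), timeout=10)


# Prepare (but do not send) a request to see the header basic auth adds.
# The URL and credentials are placeholders.
prepared = requests.Request(
    "GET", "https://example.com/data", auth=("user", "pass")
).prepare()
print(prepared.headers["Authorization"])
```

The printed value is the word `Basic` followed by the Base64 encoding of `user:pass`, which anyone can decode. This is why basic authentication should only travel over HTTPS.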

OAuth, a popular choice, allows third-party applications to access website data without requiring your password. It utilizes a token-based system, requiring application registration and an access token obtained from the website. The requests-oauthlib library simplifies handling OAuth authentication.
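As one possible illustration, the sketch below uses requests-oauthlib with the OAuth 2.0 client credentials (backend application) flow. Which flow a site supports, and its token and API URLs, depend entirely on that site; the URLs and environment variable names here are hypothetical.

```python
import os

from oauthlib.oauth2 import BackendApplicationClient
from requests_oauthlib import OAuth2Session

# Hypothetical endpoints; take the real ones from the site's API documentation.
TOKEN_URL = "https://example.com/oauth/token"
API_URL = "https://example.com/api/reports"


def make_oauth_session(client_id: str) -> OAuth2Session:
    """Create an OAuth 2.0 session for the client credentials flow."""
    return OAuth2Session(client=BackendApplicationClient(client_id=client_id))


def fetch_reports(client_id: str, client_secret: str) -> dict:
    """Obtain an access token, then call the (hypothetical) API with it."""
    oauth = make_oauth_session(client_id)
    oauth.fetch_token(
        token_url=TOKEN_URL, client_id=client_id, client_secret=client_secret
    )
    response = oauth.get(API_URL, timeout=10)
    response.raise_for_status()
    return response.json()


def main() -> None:
    # The ID and secret come from registering your application with the site.
    print(fetch_reports(os.environ["OAUTH_CLIENT_ID"], os.environ["OAUTH_CLIENT_SECRET"]))
```

Flows that act on behalf of a user, such as the authorization code flow, add a browser redirect step that this sketch does not cover.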

How to Address JavaScript-heavy Websites?

JavaScript can be a hurdle for scraping websites. Dynamically generated content might not be captured by traditional methods. Here’s how to tackle this:

Headless Browsers: Tools like Selenium (available for Python and other languages) or Puppeteer (for Node.js) allow you to control a headless browser (one without a graphical interface). The browser executes the page’s JavaScript, and you can simulate user actions such as clicks and scrolling, so the website renders its content fully before you scrape it.

Website APIs (if available): If a website offers a public API (Application Programming Interface), you can use it to request data in a structured format, eliminating the need for scraping entirely. However, not all websites have public APIs, and some require deciphering network traffic or reverse engineering API calls, which can be more complex.

Scraping websites with logins can be powerful, but remember: with great power comes great responsibility! Always check a website’s Terms of Service and respect their robots.txt file to ensure you’re scraping ethically.

This guide has walked you through various methods to scrape a website with a login, from user-friendly no-code tools to more technical approaches using Python.

Remember to choose the method that best suits your technical expertise and scraping needs. By understanding website authentication mechanisms and addressing challenges like JavaScript-heavy websites, you can effectively extract valuable data while adhering to ethical scraping practices.
