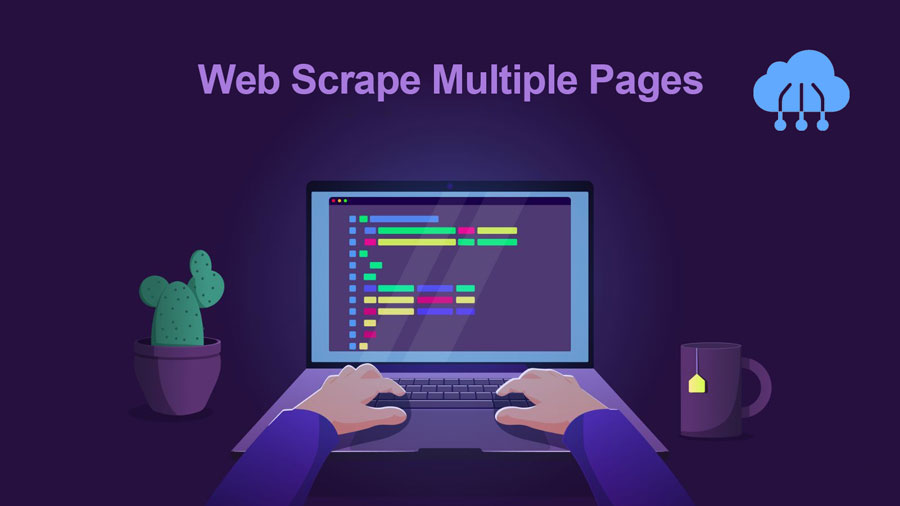

Ever wished you could compare prices from different websites at the same time? Or maybe automatically collect posts from your favorite blog? You can do both by scraping website content.

Web scraping means using a computer program to automatically copy information from websites. For example, price comparison websites use web scrapers to collect prices from different online stores. Search engines like Google use a related technique, web crawling, to find and index websites.

There are many other ways to use web scraping. In this article, we’ll look at what web scrapers do, common use cases, and how to scrape website content. Keep reading to start scraping!

What Is Web Scraping?

Web scraping, also called web harvesting, is a way to automatically collect and analyze information from websites. It involves three steps:

Mining data: Finding the information source and extracting the data from it. You load the data into an environment where you can work with it.

Parsing data: Reading the data and picking out the important parts, like panning for gold in a pile of dirt.

Outputting data: Putting the useful data into a format you can use for something else, such as a spreadsheet or database.

Web scraping usually focuses on HTML code, the basic building block of websites. Websites usually combine HTML, CSS, and JavaScript, and a browser renders all this code into the page you read. If you right-click a webpage and choose Inspect, you can see which parts of the page match which lines of code. This helps you identify the elements that hold the content you want to scrape.

Even though we’re focusing on HTML, you can scrape other kinds of data as well.

How to Scrape Website Content with Python

Here’s a simple example of how to scrape website content using the Python libraries Requests and Beautiful Soup. This scraper collects links to definitions from the WhatIs.com homepage.

Step 1: Fetch the WhatIs.com HTML

This step shows how to download the website’s HTML with Requests and print the first 1,000 characters of the WhatIs.com homepage. Printing it isn’t needed for the next step, but it shows what data the libraries work with.

Requests is a popular Python library for making HTTP requests. The response.text attribute holds the HTML that the website returned.
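A minimal sketch of this step, assuming the homepage lives at https://www.whatis.com/ (an assumption; the site may redirect, and Requests follows redirects for GET by default). The helper names fetch_html and preview are our own, not part of any library.

```python
import requests

# Assumed homepage URL; adjust it if the site has moved.
URL = "https://www.whatis.com/"


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its HTML as text."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raise an error on 4xx/5xx responses
    return response.text  # the decoded HTML body


def preview(html: str, limit: int = 1000) -> str:
    """Return the first `limit` characters of the HTML."""
    return html[:limit]


if __name__ == "__main__":
    print(preview(fetch_html(URL)))
```

Setting a timeout keeps the script from hanging forever if the server never answers.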

Step 2: Find the website addresses (URLs)

Links in HTML (the code of websites) look like this: <a href="URL">Clickable Text or Content</a>. The WhatIs.com homepage has lots of them.

To list every link on the WhatIs.com homepage, the scraper finds all the <a> (anchor) tags, which mark links, and then prints the URL stored in each tag’s href attribute.

This will show you all the links on the page, even ones that aren’t definitions.
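Here is a sketch of that link-listing step with Beautiful Soup, again assuming the https://www.whatis.com/ URL. The function name extract_links is illustrative.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://www.whatis.com/"  # assumed homepage URL


def extract_links(html: str) -> list[str]:
    """Return the href value of every <a> tag that has a non-empty href."""
    soup = BeautifulSoup(html, "html.parser")
    # find_all("a") returns every anchor tag; .get("href") is None when missing
    return [a.get("href") for a in soup.find_all("a") if a.get("href")]


if __name__ == "__main__":
    html = requests.get(URL, timeout=10).text
    for href in extract_links(html):
        print(href)
```

The built-in "html.parser" needs no extra installs; Beautiful Soup can also use faster parsers such as lxml if they are installed.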

Step 3: Find the definition URLs

Look for a pattern in the URLs of the definitions: they all contain “/definition”. Filtering the links for that string shows only the URLs with “/definition” in them.
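A sketch of that filter, written as a function that takes HTML already fetched in Step 1. The name definition_links is our own. Note that glossary URLs containing “/definitions” also pass this check.

```python
from bs4 import BeautifulSoup


def definition_links(html: str) -> list[str]:
    """Keep only links whose URL contains "/definition"."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    for a in soup.find_all("a"):
        href = a.get("href")
        # The pattern from the article: the URL must contain "/definition"
        if href and "/definition" in href:
            links.append(href)
    return links
```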

Step 4: Clean up the results

The last step mostly worked, but it still shows links to the WhatIs.com glossary, which all contain “/definitions”. To drop these and keep only definition links, change the filter condition to: if href and "/definition" in href and "/definitions" not in href:

Now, all the links will be for TechTarget definitions, and the glossary is gone.
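Putting the refined condition into the filter gives a sketch like this. The function name clean_definition_links is illustrative; the condition itself is the one from this step.

```python
from bs4 import BeautifulSoup


def clean_definition_links(html: str) -> list[str]:
    """Keep definition links but drop glossary links."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    for a in soup.find_all("a"):
        href = a.get("href")
        # "/definitions" (plural) marks glossary pages, so exclude it
        if href and "/definition" in href and "/definitions" not in href:
            links.append(href)
    return links
```

The result can still contain duplicates if the same definition is linked twice on the page.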

To save these links to a file, you can use the pandas library to load the links into a table (a DataFrame) and then save that table as a CSV file called “output.csv.”
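A sketch of the save step with pandas. The column name "url" and the placeholder link are our own; in practice you would pass the list of links built in Step 4.

```python
import pandas as pd


def links_to_frame(links: list[str]) -> pd.DataFrame:
    """Build a one-column table with one row per link."""
    return pd.DataFrame({"url": links})


if __name__ == "__main__":
    # Placeholder list; replace it with the links scraped in Step 4
    links = ["https://www.example.com/definition/API"]
    # index=False leaves out pandas' row-number column
    links_to_frame(links).to_csv("output.csv", index=False)
```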

Is It Illegal to Scrape Website Content?

Collecting public information from websites is generally okay as long as you follow certain rules. Web scraping is not the same as stealing data. No US law bans web scraping outright, and many businesses use it to make money. Some websites, like Reddit, even offer APIs that make their data easier to collect.

However, scraping website content is not always allowed. For example, taking data without permission or in violation of a website’s terms of service can be illegal and can get you into legal trouble.

Many social media sites try to limit access to their data to make it harder to scrape. LinkedIn is one example.

There was a legal fight between hiQ Labs and LinkedIn about this. hiQ scraped LinkedIn’s public profiles, and LinkedIn told it to stop. hiQ sued LinkedIn and won a preliminary injunction, because the data was public and LinkedIn’s actions appeared to be unfair to competition. The case later ended in a settlement after a court found that hiQ had breached LinkedIn’s user agreement.

LinkedIn uses technology to detect and warn people who are scraping its site.

Websites also use other methods to stop or limit scraping:

- Rate limit requests: This means setting a limit on how often a computer program can visit a website. This makes it harder for scrapers, which can visit pages faster than humans.

- Change the website’s code: Scrapers look for patterns in the website’s code to find info. Changing these patterns makes it harder for them.

- Use CAPTCHAs: These are those tests that make you prove you’re a human, like clicking on pictures of cars or typing in letters. Many scrapers can’t do this.

Many websites also have a special file called robots.txt. It’s like a set of rules for robots that tells them what they can and can’t do on the website. It lists things like what pages are okay to look at, what pages are not allowed, and how long robots should wait between visits.

You can usually find the robots.txt file at the root of the website’s domain (for example, https://www.example.com/robots.txt). Bloomberg’s robots.txt file is a good example of this.
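Python’s standard library can read these rules for you with urllib.robotparser. In this sketch the robots.txt content and the user-agent name “MyScraper” are made up for illustration; against a real site you would call set_url() and read() instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt, used only for illustration
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() takes an iterable of lines
    # can_fetch() refuses every URL until a fetch time is recorded,
    # so record one explicitly
    parser.modified()
    return parser


if __name__ == "__main__":
    rules = build_parser(SAMPLE_ROBOTS_TXT)
    print(rules.can_fetch("MyScraper", "https://example.com/private/data"))
    print(rules.crawl_delay("MyScraper"))  # seconds to wait between visits
```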

Use Cases for Scraping Website Content

Web scraping is now an essential tool for many businesses. It’s used for keeping an eye on competitors, comparing prices in real-time, and doing valuable research on the market. Let’s take a look at some common uses.

Market Research

What are your customers up to? What about the people you want to be your customers? How do your competitors’ prices stack up against yours? Do you have the info you need for a good marketing campaign?

These questions are really important for understanding your market, and you can scrape website content to get answers to all of them. Most of this info is already out there for anyone to see, so web scraping is a very useful tool for marketing teams who want to stay on top of things without wasting time doing research by hand.

Business Automation

The same benefits that make web scraping useful for market research also apply to business automation.

Many business automation tasks need lots of data, and web scraping is super helpful for that, especially if getting the data manually would be a pain.

For example, suppose you need to get data from ten different websites. Even if you’re looking for the same type of data on each one, each website might need a different way to get it. Instead of going through each website by hand, you could use a web scraper to collect the content automatically.
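One common way to handle “same data, different layout” is to keep one extraction rule per site. This is a minimal sketch: the site names and CSS selectors are hypothetical, and a real scraper would need rules matched to each site’s actual HTML.

```python
from typing import Optional

from bs4 import BeautifulSoup

# Hypothetical sites and selectors: each site marks up the same
# kind of data (here, a product name) differently.
SITE_SELECTORS = {
    "store-a.example": "h1.product-title",
    "store-b.example": "div#item span.name",
}


def extract_field(site: str, html: str) -> Optional[str]:
    """Apply the site's own CSS selector and return the matched text."""
    selector = SITE_SELECTORS[site]  # KeyError for sites with no rule yet
    tag = BeautifulSoup(html, "html.parser").select_one(selector)
    return tag.get_text(strip=True) if tag is not None else None
```

Adding an eleventh website then means adding one dictionary entry rather than writing a new scraper.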

Lead Generation

As if market research and business automation weren’t enough, scraping website content can also easily create lists of potential customers.

While you need to be specific about who you’re looking for, you can use web scraping to gather enough info on people to make organized lists of leads. The results might vary, of course, but it’s much easier (and more likely to work) than making the lists yourself.

Price Tracking

Collecting prices from websites is one of the most common reasons to scrape website content.

One example is the Camelcamelcamel app, which tracks prices on Amazon. It regularly checks product prices and shows how they change over time on a graph.

Prices can change a lot, even every day (see the big drop on May 9th!). By looking at how prices have changed in the past, users can see if they’re getting a good deal. In this example, they might wait a week to save $10.
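A price tracker boils down to extracting a number from a page and comparing it with earlier readings. This sketch assumes the price sits in an element matching a hypothetical “.price” selector and uses US-style formatting like “$1,299.99”; it is not how camelcamelcamel works internally.

```python
import re
from decimal import Decimal
from typing import Optional

from bs4 import BeautifulSoup

PRICE_SELECTOR = ".price"  # hypothetical; every store marks up prices differently


def extract_price(html: str, selector: str = PRICE_SELECTOR) -> Optional[Decimal]:
    """Pull a price like "$1,299.99" out of the page as a Decimal."""
    tag = BeautifulSoup(html, "html.parser").select_one(selector)
    if tag is None:
        return None
    # Grab the first number, allowing thousands separators and decimals
    match = re.search(r"\d[\d,]*(?:\.\d+)?", tag.get_text())
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


def lowest_price(history: list[tuple[str, Decimal]]) -> tuple[str, Decimal]:
    """Return the (date, price) pair with the lowest recorded price."""
    return min(history, key=lambda item: item[1])
```

Decimal avoids the rounding surprises of floating-point numbers when working with money.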

However, price scraping can cause problems. Some apps update prices so frequently that they overload websites with requests.

Because of this, many online stores have started taking extra steps to block web scrapers, such as the rate limits and CAPTCHAs described earlier.
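A simple way to avoid overloading a site is to pause between requests. This sketch takes the fetch function as a parameter (for real use, something like lambda u: requests.get(u, timeout=10).text); the 5-second default delay is an arbitrary example, so use the site’s Crawl-delay or published limits where they exist.

```python
import time
from typing import Callable, Iterable


def fetch_politely(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    delay_seconds: float = 5.0,
) -> dict[str, str]:
    """Fetch each URL in turn, sleeping between consecutive requests."""
    results = {}
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay_seconds)  # wait before every request after the first
        results[url] = fetch(url)
    return results


if __name__ == "__main__":
    # Stand-in fetcher for demonstration; swap in a real HTTP call
    pages = fetch_politely(
        ["https://example.com/a", "https://example.com/b"],
        fetch=lambda u: f"<html>{u}</html>",
        delay_seconds=1.0,
    )
    print(list(pages))
```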

News & Content

Knowing what’s going on is super important. Scraping website content is a great way to stay informed, whether you’re checking on your reputation or following what’s happening in your industry.

While some news sites and blogs have easy ways to get their content, like RSS feeds, not all of them do. So, if you want to gather the exact news and info you need, you’ll probably need to use web scraping.
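When a site does offer an RSS feed, reading it is usually simpler than scraping its HTML. This sketch handles RSS 2.0 only (Atom feeds use different element names) and parses the feed with Python’s built-in xml.etree.ElementTree; fetching the feed itself could use Requests as in Step 1.

```python
import xml.etree.ElementTree as ET


def parse_rss_items(rss_xml: str) -> list[dict[str, str]]:
    """Return the title and link of each <item> in an RSS 2.0 feed."""
    root = ET.fromstring(rss_xml)
    items = []
    for item in root.iter("item"):
        items.append({
            # findtext() returns the default when the child element is missing
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
        })
    return items
```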

Brand Monitoring

While you’re checking the news, why not see what people are saying about your brand? If your brand gets talked about a lot, web scraping is a great way to stay updated without having to read through tons of articles and news sites.

Scraping website content can also show you the lowest price your product or service is being sold for online. This is technically a type of price scraping, but it’s important information that can help you see if your prices match what customers expect.
