Are you looking for a way to easily extract data from your website and store it directly into Google Sheets? Web scraping, although useful, often requires you to have programming knowledge. But there’s good news for you: Google Sheets has a user-friendly solution that completely eliminates the need for programming knowledge! But, do you know How to Scrape Data from a Website into Google Sheets?

Reading this article will help you learn how to extract website data directly into Google Sheets. We’ll learn how to use the built-in Google Sheets capabilities to easily collect information from websites. From there, you’ll be equipped to unlock the potential of web scraping for your jobs or projects without having to write a single line of code.

What Formulas Can be Used for Google Sheets Scrape Websites?

Web scraping can be a powerful tool, but traditional methods often require technical expertise, expensive software, and complex coding. Thankfully, Google Sheets offers a user-friendly alternative.

Google Sheets provides a variety of ways to scrape data based on your needs:

Built-in Formulas: Google Sheets offers four core formulas for web scraping:

- IMPORTFEED: This formula retrieves data from RSS feeds, perfect for keeping tabs on updates from your favorite websites.

- IMPORTHTML: This versatile formula tackles lists and tables, allowing you to extract structured data from webpages.

- IMPORTDATA: Need to import any kind of structured data? This formula is your go-to for scraping a variety of data formats.

- IMPORTXML: This formula empowers you to utilize XPath queries for extracting any type of data from a webpage.

Custom Scripts (Advanced): For users with programming experience, Google Sheets allows writing custom scripts in Google Apps Script (GS). Similar to JavaScript, GS offers more flexibility for users who want to tailor their scraping needs through code.

Why Is Google Sheets a Great Tool for Scraping Websites?

While powerful web scraping tools exist, Google Sheets offers a surprisingly effective and accessible alternative. Here’s why it stands out:

- User-Friendly Interface: If you’re comfortable with spreadsheets, you’re good to go! Google Sheets eliminates the intimidation factor often associated with web scraping tools.

- No Coding Required: Leave the complex programming languages behind. Google Sheets utilizes built-in formulas that make data extraction a snap, even for beginners.

Accessibility Anywhere: The cloud-based nature of Google Sheets allows you to scrape data from any device with an internet connection – ultimate flexibility! - Free and Cost-Effective: For individuals and small businesses, Google Sheets offers a budget-friendly solution for web scraping needs.

- Seamless Integration: As part of the Google Suite, Google Sheets integrates effortlessly with other Suite tools, streamlining your workflow further.

- Automation Power: Take your web scraping efficiency to the next level! Google Sheets allows you to automate repetitive tasks using macros or scripts, freeing up your time for analysis.

- Built-in Analysis Tools: Once you’ve scraped your data, Google Sheets shines again. Utilize its powerful formulas to analyze and gain valuable insights from your extracted information.

What Is IMPORTXML?

Google Sheets’ IMPORTXML function is your one-stop shop for grabbing data from various sources. It’s a versatile tool that can handle not just XML documents, but also HTML web pages. Looking to import data in other structured formats?

IMPORTXML has you covered! It can also work with Comma Separated Values (CSV) files, Tab Separated Values (TSV) files, Really Simple Syndication (RSS) feeds, and even Atom XML feeds. This broadens your options for pulling data into your spreadsheets, giving you more control over your data analysis.

How Can IMPORTXML Work?

The IMPORTXML function unlocks the power to extract data directly from webpages into your spreadsheets. It needs two necessary parts:

- Website URL: This tells IMPORTXML where to find the data you want.

- XPath Query: This acts like a treasure map, pinpointing the specific data you need on the webpage.

Here’s an example: imagine you want to grab the title of this very website (https://quotes.toscrape.com/). The formula would be:

=IMPORTXML(“https://quotes.toscrape.com/”, “//title”)

As you can see, the URL goes first, followed by the XPath query (//title) within quotation marks.

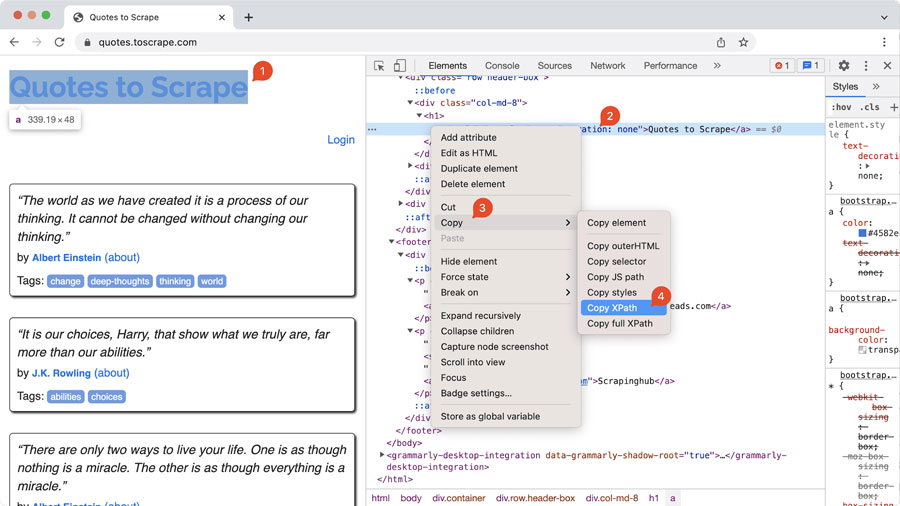

Feeling lost with XPath? Here’s a handy trick:

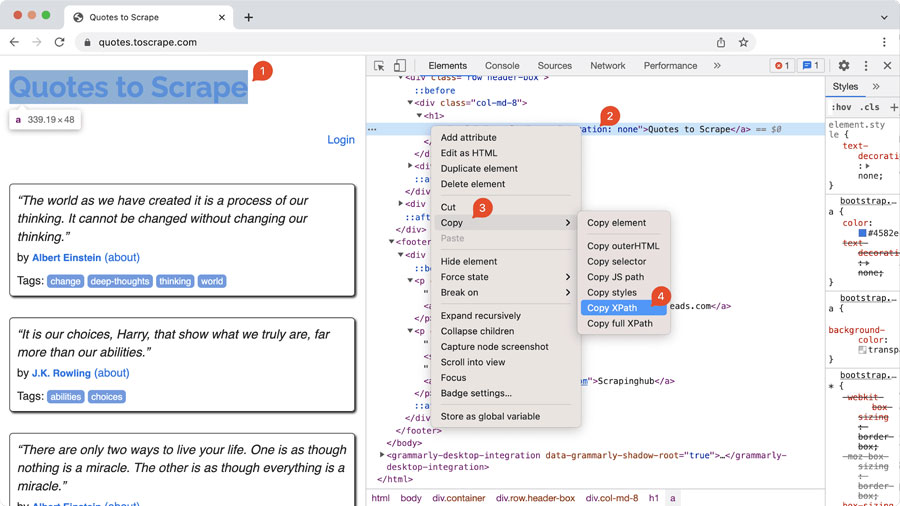

- Open the webpage in your browser.

- Right-click the element you want to extract (like the title) and choose “Inspect.”

In the developer tools, right-click the element’s highlighted HTML and select “Copy” -> “Copy XPath.”

This copies the exact XPath for that element, making it easy to use in your IMPORTXML formula.

Let’s say you want the first quote from that same website. We can use a slightly more complex XPath:

=IMPORTXML(“https://quotes.toscrape.com/”, “(//*[@class=’text’]/text())[1]”)

This retrieves the first element with the class “text” (which likely holds the quote) within the webpage.

If XPaths seem intimidating, don’t fret! We have a dedicated blog section explaining how to write them like a pro.

For maximum control, you can store the URL in cell A1 and the XPath in cell A2. Your formula can then reference these cells:

=IMPORTXML(A1, A2)

This approach keeps your formulas clean and organized.

What Is XPath?

IMPORTXML relies on XPath, a special language for navigating web pages like a map. This crash course equips you with the basics to get started:

- Finding Elements: Imagine the webpage structure as a tree. The forward slash (/) separates elements, helping you pinpoint the data you need. For example, /html/body/h1 locates the first h1 element within the body section of the webpage.

- Matching All Elements: Want every h1 element, not just the first? Use double forward slashes (//). This acts like a wildcard, searching for all matching elements anywhere in the document (e.g., //h1).

- Extracting Text: The text() function grabs the actual text content of an element. //h1/text() retrieves the text within all h1 elements.

- Extracting Attributes: Attributes provide additional information about elements. The @ symbol helps you access them. For instance, //h1/@class extracts the value of the class attribute for all h1 elements.

- Filtering with Square Brackets: Square brackets ([]) help refine your search. //h1[@class=”pdp_orange”] targets only h1 elements with the specific class “pdp_orange”.

With this basic understanding, you can start using IMPORTXML to extract data from webpages, unlocking a world of automation possibilities in your spreadsheets!

How to Scrape Data from a Website into Google Sheets

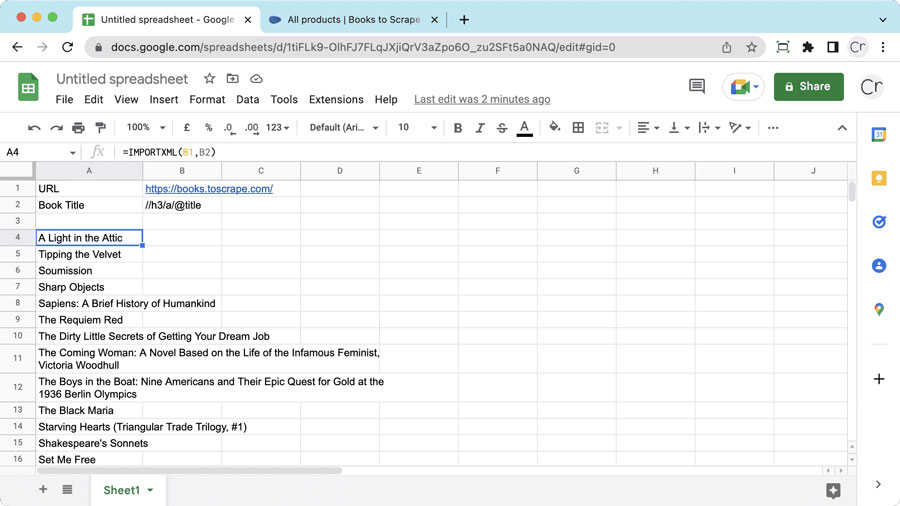

Here’s how to scrape data from a website into Google Sheets, without any add-ons:

1. Find the Magic Key (XPath):

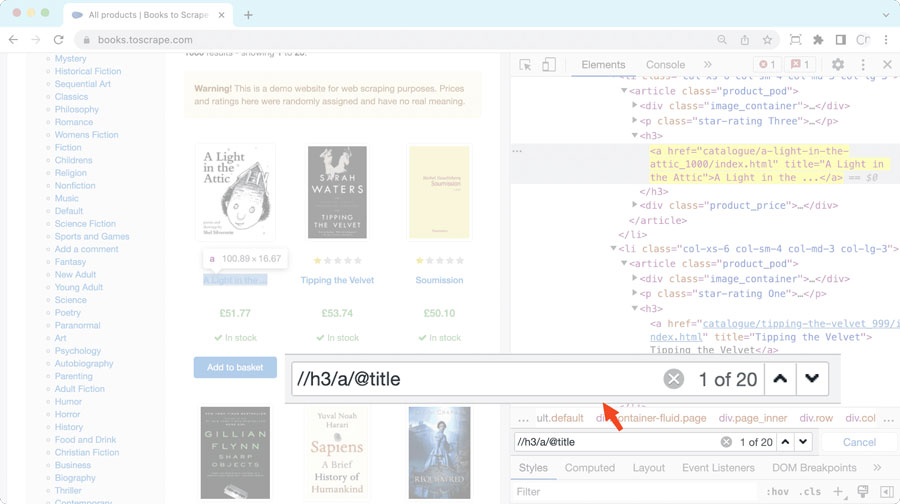

- Open the target website (e.g., https://books.toscrape.com/) in Chrome.

- Right-click the element you want to extract (like a book title) and select “Inspect.”

- In the developer tools window, find the element’s HTML code. Right-click it, choose “Copy” -> “Copy XPath.” This grabs the unique identifier (XPath) for that element.

- Want all elements of a type? Use // instead of a single forward slash (/). For example, //h3 selects all h3 elements.

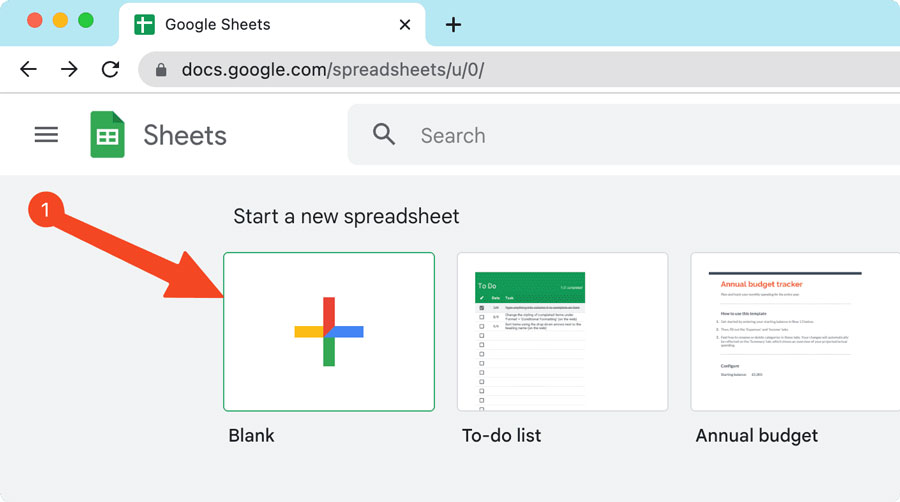

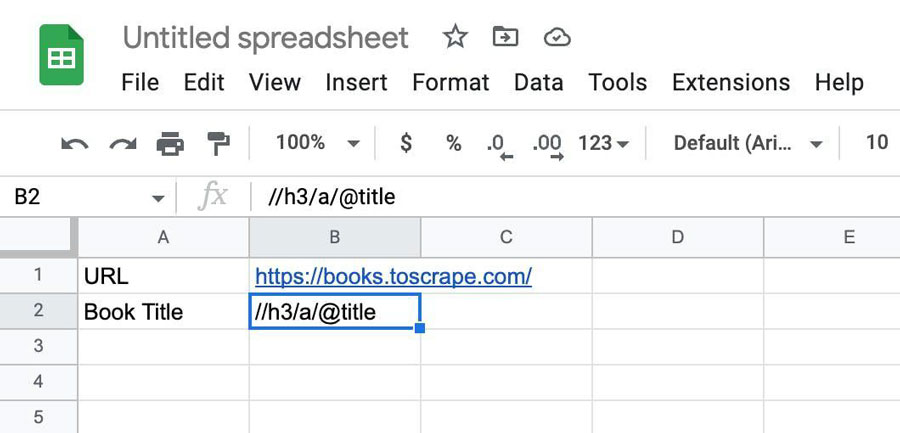

2. Prep Your Google Sheet:

- Create a new sheet in Google Sheets.

- Enter the website URL in one cell and the copied XPath in another. This keeps your formula flexible and easy to maintain.

3. Unleash the IMPORTXML Power:

- In a new cell (e.g., A4), enter the following formula, replacing B1 and B2 with the actual cell references where you stored the URL and XPath:

=IMPORTXML(B1, B2)

- Press Enter. Google Sheets will fetch the data based on your XPath. Here, it will extract all book titles.

Extracting More Data:

- To grab additional information, repeat steps 1 and 2 to find the XPath for the new data (e.g., book price might use //*[@class=”price_color”]/text()).

- Enter the new XPath in a separate cell (e.g., B3).

- Create a new formula referencing the URL and new XPath cell (e.g., =IMPORTXML(B1, B3)) in another cell (e.g., B4).

Read more: How To Scrape Data From A Website

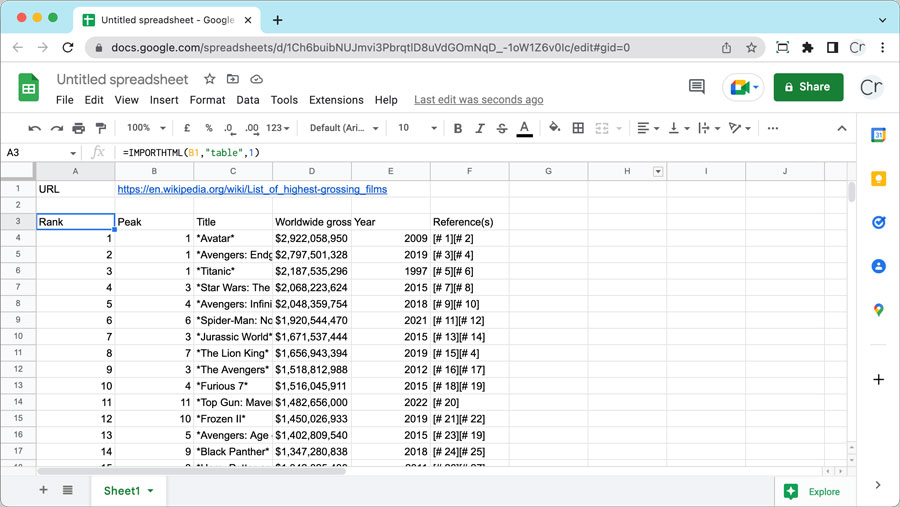

How to Bring Table from a Website in Google Sheets

Looking to scrape data from website tables into your Google Sheets? Look no further than the IMPORTHTML function!

Here’s a breakdown of how it works:

What it Needs:

- URL: The complete web address (including “http://”) of the page you want to scrape.

- Type: Specify “table” or “list” depending on where your data resides.

- Index: This tells IMPORTHTML which table (or list) to grab. Remember indexing starts from 1, and separate indexes exist for tables and lists.

Let’s extract data from the Wikipedia page listing highest-grossing films: https://en.wikipedia.org/wiki/Lists_of_highest-grossing_films.

- Create a New Sheet: Start by creating a new sheet in Google Sheets.

- Enter the URL: In a cell (e.g., B1), paste the target webpage URL.

- Extract the Entire Table: Use the following formula in another cell (e.g., A3) to import the entire first table:

=IMPORTHTML(B1,”table”,1)

Hit Enter, and voila! You’ll see the entire table imported into your spreadsheet.

Want only specific data, like movie titles (usually in column 3)? Use the INDEX function alongside IMPORTHTML:

=INDEX(IMPORTHTML(“https://en.wikipedia.org/wiki/List_of_highest_grossing_films”,”table”,1),,3)

Here, we’ve omitted the optional “row” parameter since we want all rows.

IMPORTHTML is a powerful tool for scraping tables, but there’s more! We’ll explore other helpful functions like IMPORTFEED in future sections.

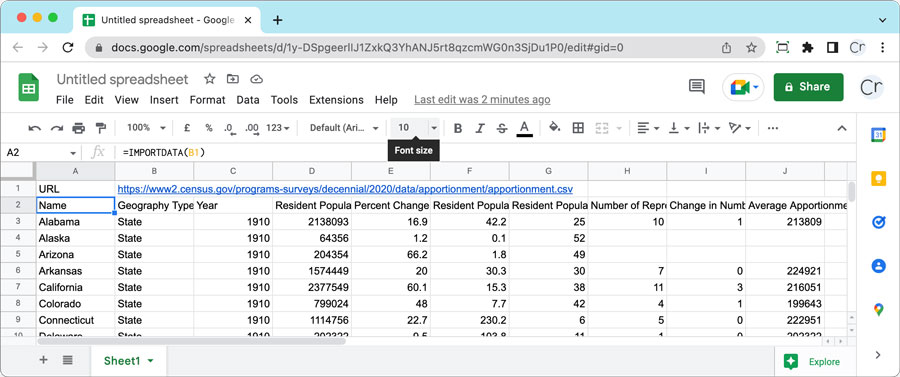

How to Extract Data from CSV to Google Sheets

Need to grab data from a website stored in a CSV file? Google Sheets has you covered! The IMPORTDATA function makes it a breeze.

Here’s how it works:

- New Sheet: Start by creating a new sheet in Google Sheets.

- CSV File URL: Enter the complete URL of the website containing the CSV file in a cell (e.g., B1). For example, let’s use the US Census apportionment data:

- https://www2.census.gov/programs-surveys/decennial/2020/data/apportionment/apportionment.csv

- IMPORTDATA Formula: In another cell (e.g., A2), enter the following formula:

=IMPORTDATA(B1)

Hit Enter, and IMPORTDATA will fetch the data from the CSV file directly into your spreadsheet.

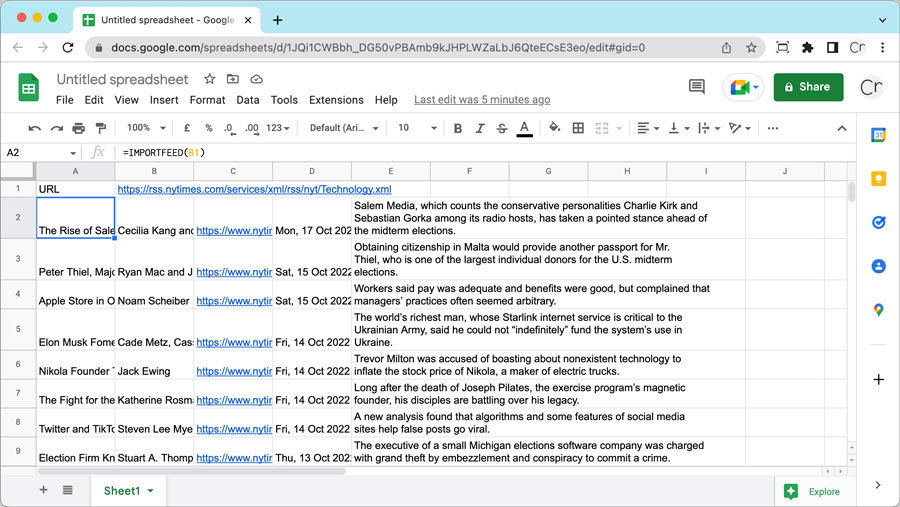

How to Scrape Data from XML Feeds to Google Sheets

RSS and Atom are popular formats for website feeds, but scraping them with IMPORTXML can be tricky due to XPath queries. Here’s where IMPORTFEED comes in – a built-in hero for fetching data from feeds!

Unlike IMPORTXML, IMPORTFEED only requires one argument: the URL of the feed. Simply provide the URL, and IMPORTFEED takes care of the rest, delivering the data directly to your Google Sheet.

Let’s grab tech news headlines from the New York Times:

- New Sheet: Create a new sheet in Google Sheets.

- Feed URL: Enter the feed URL (e.g., https://www.nytimes.com/rss) in cell B1.

- Magic Formula: In cell A2, enter the following formula:

=IMPORTFEED(B1)

Press Enter, and boom! You’ll see the latest tech headlines from the New York Times populated in your sheet.

What Are Benefits and Drawbacks of Google Sheets Web Scraper?

Google Sheets import functions like IMPORTXML, IMPORTHTML, and IMPORTDATA offer a convenient way to grab data from websites without writing code or installing add-ons. Here’s a breakdown of their pros and cons:

Pros:

- No Coding Needed: These functions are user-friendly, eliminating the need for complex coding knowledge.

- Built-in Web Scraping: Extract data directly within Google Sheets, streamlining data collection.

- Dynamic Imports: Use formulas like any other Google Sheets formula, allowing them to reference other cells for flexibility.

Cons:

- Scalability Limits: Google Sheets isn’t ideal for handling massive datasets (millions of records).

- Static Headers: You can’t customize headers. Standard Google headers, including user-agent, might get blocked by some websites.

- Limited Functionality: Complex tasks like sending POST requests or using proxies are not supported.

- Advanced Limitations: For truly advanced web scraping scenarios, programming or professional solutions like Large-scale Web Data Acquisition might be necessary.

FAQs

Can You Scrape Website Data into Google Sheets?

Google Sheets makes it easy to pull information directly from websites. It has built-in tools like IMPORTHTML, IMPORTXML, IMPORTDATA, and IMPORTFEED to help you. These functions are great for simple tasks, but for more complex websites or scraping projects, you might need a specialized web scraping tool or to write your own code.

Can the Scraped Data from Websites Stay Fresh?

Leaving your Google sheet active allows these functions to search for new data updates every hour. Additionally, data will be renewed if you remove and then reinsert the same cell. It’s important to note, however, that refreshing your sheet or duplicating a cell with these functions through copy-paste does not trigger a data update.

Conclusion

Google Sheets offers a surprisingly powerful tool for web scraping – collecting data directly from websites. No coding required! This makes it perfect for anyone who needs to gather information online.

However, you don’t know how to scrape data from a website into Google Sheets. Well, get ready to transform your spreadsheets! Google Sheets offers built-in functions like IMPORTHTML, IMPORTXML, and IMPORTDATA that act like data magnets, grabbing information from websites and CSV/TSV files.

In just a few minutes, you can set up a “scraper” to automatically pull specific data into your spreadsheet. This opens doors for various uses, from market research to creating personalized reports.

Read more: