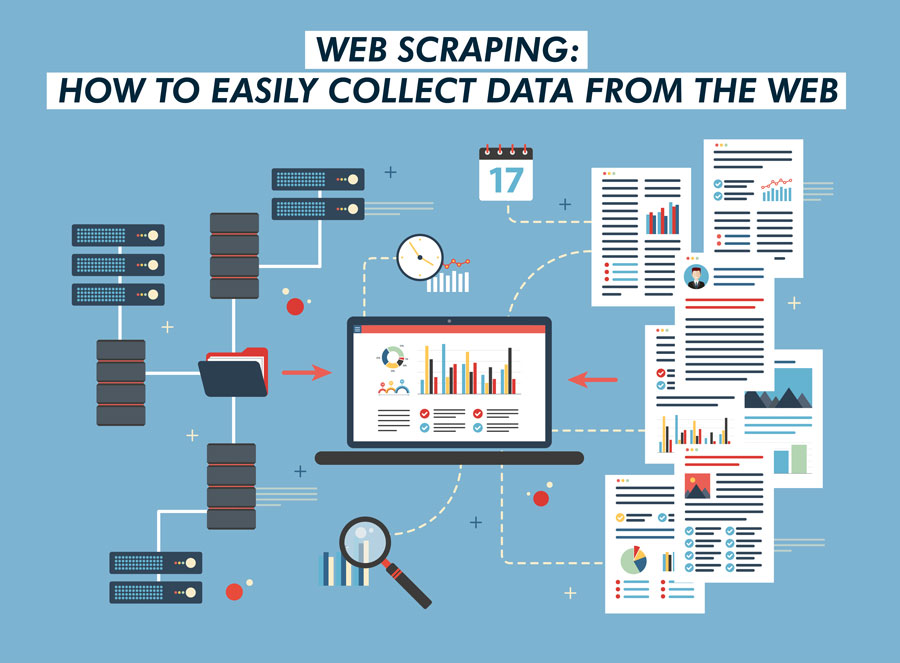

Have you ever wished you could extract valuable insights directly from websites? Structured data, the backbone of those insights, is gold for businesses across industries. It fuels predictions, trend identification, and targeted audience shaping.

That’s what gave rise to web scraping – an increasingly popular method of retrieving structured data. But, do you know how to scrape data from a website?

This article covers the basics of web scraping: what it is, which websites are suitable for it, and how it works. Best of all, you don’t need coding skills to get started. Read on to learn how to scrape information from a website quickly and in a way that suits your needs.

What Is Web Scraping?

Web scraping, also known as web data extraction, is like auto-copy-paste on steroids. It automatically grabs data, structured or not, from websites. This data can be anything from product prices to news articles.

Web scraping is your super-powered research assistant. It will help you gather and organize all the information publicly available on the internet. Businesses use this smart tool to track competitors’ prices, stay up to date with the latest industry news, and even find new customers.

Instead of copying everything by hand, web scraping tools use automation (and sometimes a bit of machine learning) to collect large amounts of data in a fraction of the time. This allows you to process millions of website listings far faster, and with fewer mistakes, than manual work.

In particular, you don’t need to be a technology expert to scrape the web. You can learn how to do it or find services that can help you with the heavy lifting. Either way, knowing how it works is extremely useful, and it could change the way you think about information online.

What is a Website Scraping Software?

Ever grabbed a bunch of info from a website and put it into a spreadsheet? That’s web scraping in a nutshell! Web scraping tools are like digital assistants that pull specific data from websites.

These tools work by visiting a website and sifting through the publicly available information, the kind you see as text and images on your screen. They can also, in some cases, access data stored behind the scenes through special tools called APIs.

The web scraping landscape offers a diverse range of tools, each with its own strengths for tackling your data extraction needs. Some are simple to use, while others offer more advanced features for complex projects.


Ways to Scrape a Website

There exist numerous methodologies to extract data from websites, each requiring varying levels of coding proficiency.

Among the non-coding methods, we can explore the following options:

- Manual copy and paste: The most straightforward approach involves manually copying the desired data from its source on the website and analyzing it subsequently.

- Browser developer tools: Browsers offer a plethora of built-in tools that allow for inspecting and extracting website elements. An example of such a tool is the inspect function, which reveals the underlying source code of a webpage.

- Browser extensions: Additional functionalities can be added to browsers through extensions, enabling pattern-based web scraping.

- RSS feeds: Certain websites provide structured lists of data in the form of RSS feeds.

- Web scraping services: Platforms like Diffbot, Octoparse, Import.io, and ParseHub offer no-code solutions for web scraping.

- Data mining software: Comprehensive software like KNIME and RapidMiner provides a range of data science and analytics capabilities, including web scraping.

On the other hand, coding is involved in the following methods:

- Beautiful Soup: Python’s Beautiful Soup library is an excellent resource for those new to scraping, as it requires only a minimal amount of coding knowledge and is ideal for one-off HTML scraping projects.

- APIs: Many websites offer structured APIs that let users retrieve data directly, without parsing web pages. Using an API usually requires a basic understanding of data formats such as JSON and XML, as well as of HTTP requests.
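To make the API route more concrete, here is a minimal sketch of handling a JSON response using only Python’s standard library. The response body, its field names (`products`, `name`, `price`, `in_stock`), and the `available_products` helper are all made up for illustration; a real API will have its own structure, and you would fetch the text over HTTP rather than hard-code it.

```python
import json

# Hypothetical response body from a product API. A real call would fetch
# this text over HTTP (for example with urllib.request) instead.
SAMPLE_RESPONSE = """
{
  "products": [
    {"name": "Desk lamp", "price": 24.99, "in_stock": true},
    {"name": "Office chair", "price": 129.0, "in_stock": false},
    {"name": "Monitor stand", "price": 39.5, "in_stock": true}
  ]
}
"""


def available_products(response_text):
    """Parse the JSON body and return (name, price) for items in stock."""
    payload = json.loads(response_text)
    return [
        (product["name"], product["price"])
        for product in payload.get("products", [])
        if product.get("in_stock")
    ]


in_stock = available_products(SAMPLE_RESPONSE)
for name, price in in_stock:
    print(f"{name}: {price}")
```

Because the API already returns structured data, there is no HTML to pick apart: one call to `json.loads` turns the response into ordinary Python dictionaries and lists.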

More advanced coding knowledge is required for the following scraping techniques:

- Scrapy: Python’s Scrapy library caters to more complex web scraping tasks. Although Scrapy offers extensive functionality, it can be challenging for newcomers.

- JavaScript: Leveraging the Axios library for HTTP requests and the Cheerio library for HTML parsing in Node.js allows for effective scraping.

- Headless browsers: Automation tools like Selenium or Puppeteer can manipulate browsers using scripts and handle websites with substantial JavaScript content.

- Web crawling frameworks: Advanced frameworks such as Apache Nutch facilitate large-scale web scraping endeavors.

Are There Limitations When You Scrape Information from a Website?

Not all websites are cool with scraping. Some have rules against it, while others might let you take some data, but not everything. It’s kind of like being a guest at a party – gotta follow the host’s guidelines!

How to check a website’s scraping policy? Look for a file called “robots.txt” (all lowercase). Just add “/robots.txt” to the website’s address. For instance, to see if IMDb allows scraping, check https://imdb.com/robots.txt.

What’s the robots.txt file about? It tells search engines (and sometimes you!) which parts of the website are off-limits for crawling. This helps avoid overwhelming the website with too many requests at once. Imagine a bunch of people showing up at your door at the same time – website servers don’t like that either!

So, what about IMDb? Their robots.txt file says “no scraping” for certain areas like movie listings and TV schedules.

Web scraping isn’t inherently illegal, but there are guidelines to follow. Always check the robots.txt file before scraping to avoid getting blocked or worse.
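If you do end up writing code, the robots.txt check can be automated. The sketch below uses Python’s built-in `urllib.robotparser` module on a made-up robots.txt file, so it runs without touching any real website. The rules, the `my-scraper` user agent name, and the example.com URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs offline.
# For a live site you would call parser.set_url(".../robots.txt") and
# parser.read() instead of parser.parse().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /search
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Create a parser loaded with the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


robots = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether our (hypothetical) scraper may fetch two different paths.
allowed_product = robots.can_fetch("my-scraper", "https://example.com/products/1")
allowed_private = robots.can_fetch("my-scraper", "https://example.com/private/data")

# Seconds the site asks crawlers to wait between requests, if it says so.
delay = robots.crawl_delay("my-scraper")

print(allowed_product, allowed_private, delay)
```

Keep in mind that robots.txt only covers crawling rules. A site’s terms of service may add restrictions that this check will not catch.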

Is Scraping Data from a Website Legal?

Grabbing public data from websites is generally okay, as long as you play by the rules. It’s not stealing data, and many businesses use it perfectly legally. Some platforms, like Reddit (although not for free anymore!), even offer APIs to make scraping easier.

But there are limits! Scraping can become unlawful when you take data without permission, especially if it goes against a website’s terms of service or involves personal or copyrighted material. Laws like the Computer Fraud and Abuse Act, data protection regulations (like GDPR), and copyright laws can come into play here, leading to trouble.

Not all platforms welcome scrapers with open arms. Social media sites like LinkedIn often try to make scraping difficult. Remember the HiQ vs. LinkedIn case in 2017? HiQ, a data company, sued LinkedIn after being told to stop scraping public profiles. The court sided with HiQ initially, highlighting that the data was public and LinkedIn’s actions might be anti-competitive.

So, how do platforms fight back against scrapers? Here are a few tricks:

- Rate limiting: This puts a cap on how many requests an IP address can make in a set time, making it harder for automated scrapers to overwhelm the website.

- HTML shuffle: Regularly changing the website’s HTML structure throws scrapers off track. Imagine a scraper looking for a specific pattern in a messy room – not easy to find what you need!

- CAPTCHAs: These “are you human?” tests can trip up scrapers, forcing them through challenges designed to be easy for real people and hard for bots.
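To see why rate limiting is so effective against automated scrapers, it helps to look at how simple the idea is. The following is a minimal sketch of a sliding-window limiter, not how any particular platform implements it. The class name, the limit of 3 requests per 10 seconds, and the IP address are all assumptions chosen for the example.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client IP -> request timestamps

    def allow(self, client_ip, now):
        """Return True if the request at time `now` (in seconds) is permitted."""
        timestamps = self._history[client_ip]
        # Forget requests that have fallen out of the time window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10)

# Four quick requests from one (hypothetical) address, then one much later.
decisions = [limiter.allow("203.0.113.7", t) for t in (0, 1, 2, 3, 12)]
print(decisions)
```

The fourth request is refused because it arrives while three earlier ones are still inside the window. A well-behaved scraper avoids this by pausing between requests.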

What Are Differences Between Website Scraping and Web Crawling?

Understanding the difference between a web crawler and a scraper is key. Crawlers explore websites, finding all the relevant URLs. Scrapers then take over, using those URLs to pinpoint and extract the specific data you’re after. Think of them as a tag team – crawlers find the location, and scrapers grab the treasure!

Website Scraping

Web scrapers are like data vacuum cleaners! They suck up specific information from websites, quickly and precisely. There are many different web scraping tools available, each suited to different scraping tasks, some simple and some more complex.

They use special instructions called “selectors” that tell them where to look in the website’s code (the HTML file). These selectors can be like precise addresses, pointing to exactly the data you want. There are a few common types of selectors, like XPath, CSS selectors, and regular expressions.
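Here is a small sketch of two of those selector types in action, using only Python’s standard library. The HTML fragment and its class names are invented for the example. Note two assumptions: the fragment is deliberately well-formed so that `xml.etree.ElementTree` can read it (real pages often are not, which is why dedicated HTML parsers exist), and ElementTree supports only a limited subset of XPath. CSS selectors need a third-party library, so they are left out here.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed HTML fragment used as the page to scrape.
SAMPLE_HTML = """
<div>
  <ul class="products">
    <li class="product"><span class="name">Desk lamp</span> <span class="price">$24.99</span></li>
    <li class="product"><span class="name">Office chair</span> <span class="price">$129.00</span></li>
  </ul>
</div>
"""

root = ET.fromstring(SAMPLE_HTML)

# XPath-style selector: every <span class="name"> anywhere under the root.
names = [span.text for span in root.findall(".//span[@class='name']")]

# Regular expression: every dollar amount that appears in the raw text.
prices = [float(amount) for amount in re.findall(r"\$(\d+\.\d{2})", SAMPLE_HTML)]

print(names)
print(prices)
```

The XPath selector relies on the page structure (tags and attributes), while the regular expression relies on the shape of the text itself. Structural selectors tend to survive wording changes better, and regular expressions tend to survive layout changes better.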

Website Crawling

Website crawlers are like busy researchers, constantly searching and indexing the vast web.

In web scraping projects, crawlers often come first. They act like scouts, venturing out to find all the relevant URLs on a website (or even the entire web!). These URLs are then passed on to the scraper, which extracts the specific data you’re interested in.
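The crawler-then-scraper hand-off can be sketched in a few lines of Python. To keep it runnable without a network connection, the “website” below is just a dictionary of three made-up pages. The `SITE` contents and the `PageParser`, `crawl`, and `scrape` names are all assumptions for illustration, and a real crawler would also need politeness delays, error handling, and robots.txt checks.

```python
from html.parser import HTMLParser

# Hypothetical three-page site held in memory so the sketch needs no network.
SITE = {
    "/": '<a href="/lamp">Lamp</a> <a href="/chair">Chair</a>',
    "/lamp": '<h1>Desk lamp</h1> <a href="/">Home</a>',
    "/chair": '<h1>Office chair</h1> <a href="/lamp">See also</a>',
}


class PageParser(HTMLParser):
    """Collect link targets and the text of the first <h1> on a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.title = None
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "h1":
            self._in_h1 = True

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1 and self.title is None:
            self.title = data.strip()


def parse_page(path):
    """Parse one in-memory page and return the filled-in parser."""
    parser = PageParser()
    parser.feed(SITE[path])
    parser.close()
    return parser


def crawl(start):
    """Crawler: follow links breadth-first and return every URL discovered."""
    seen, queue = [start], [start]
    while queue:
        current = queue.pop(0)
        for link in parse_page(current).links:
            if link in SITE and link not in seen:
                seen.append(link)
                queue.append(link)
    return seen


def scrape(paths):
    """Scraper: extract the <h1> text from each URL the crawler found."""
    return {path: parse_page(path).title for path in paths}


urls = crawl("/")
titles = scrape(urls)
print(urls)
print(titles)
```

The crawler only cares about links and never looks at the page content, while the scraper only cares about the content and never follows links. Keeping the two jobs separate makes each one easier to change later.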

What Are Use Cases of Website Scraping?

Let’s explore website scraping use cases that you should know.

Price Intelligence

Web scraping for price intelligence is like having a secret weapon in the e-commerce game!

Imagine knowing exactly what your competitors are charging, and how product trends are shifting. That’s the power of price intelligence. By scraping product and pricing info from e-commerce sites, you can turn raw data into gold.

Here’s how web scraping fuels smarter e-commerce decisions:

- Dynamic Pricing: Scraped data lets you adjust prices automatically to stay competitive and maximize profits.

- Revenue Optimization: See what sells and at what price to squeeze the most revenue from your products.

- Competitor Monitoring: Keep a pulse on your rivals’ pricing strategies and stay one step ahead.

- Product Trend Monitoring: Identify hot and cold sellers so you can stock up on the right items.

- Brand and MAP Compliance: Ensure you’re following Minimum Advertised Price guidelines set by manufacturers.

Market Research

All operations, strategies, and plans today require data, so accurate market research has become a requisite for success. Web scraping provides a great tool for collecting high-quality, high-volume data that helps you make informed decisions.

Here’s how web scraping can fuel your market research:

- Uncover hidden trends: Identify emerging markets, new consumer preferences and trends by collecting relevant data points across the web.

- Monitor competitor prices: Update real-time information about competitors’ pricing strategies in detail. This allows you to time your own pricing to achieve the greatest benefit.

- Find the perfect entry point: Collect and process data quickly to help you identify the most suitable market and customer segment for your product or service.

- Drive R&D: Collect valuable data that will inform product development and ensure you are creating products and services that satisfy real needs.

- Stay ahead of your competitors: Collecting relevant data will help you continuously monitor your competitors’ activities. This allows you to adapt and stay one step ahead.

Finance

In today’s information age, informed decision-making is key. That’s why leading firms leverage the power of web-scraped data for its unmatched strategic value.

With web-scraped data, firms can:

- Unearth hidden insights from SEC filings.

- Accurately estimate company fundamentals.

- Gauge public sentiment and market trends.

- Monitor real-time news for informed investment strategies.

Real Estate

The real estate market is changing rapidly. New online players are emerging, and the way we work needs to adapt. But fear not! Web scraping can be your secret weapon to stay competitive and make smarter decisions.

By incorporating web-scraped data into your daily operations, you can:

- Become a Pricing Pro: Accurately appraise property values by leveraging real-time market data. No more guesswork, just confident pricing strategies.

- Fill Vacancies Faster: Track vacancy rates in different areas and identify neighborhoods with high demand. Target your marketing efforts for quicker rentals.

- Maximize Rental Income: Use data to estimate rental yields on potential properties. Invest in properties with the highest earning potential.

- Predict market trends: Capture and understand market trends through real-time data on listings, sales and rentals. This allows you to anticipate changes and adjust your strategy accordingly.

Content Monitoring

A short news story can make or break the reputation of an individual or organization. Whether you are a business leader or an employee tasked with staying informed, web scraping news data becomes the decisive weapon for your success.

Stay in Control of Your Narrative:

- Track Industry Trends: Monitor relevant news stories to understand what’s happening in your field and identify potential opportunities.

- Monitor public perception of your business: Analyze online sentiment to gauge public perception and attitudes about your brand and quickly find ways to address any concerns.

- Track your competitors: Track your competitors’ activities, strategies and business situation to stay one step ahead.

- Navigate the political landscape: Depending on the industry, political changes can have a major impact on a business, so it pays to follow the policy news that affects yours.

- Make fast, data-driven decisions: Get valuable insights from news data to help you and your business choose the right strategies quickly.

Brand Monitoring

In today’s market, customer sentiment reigns supreme. Web scraping for brand monitoring allows you to:

- Track online reviews and social media conversations to understand how customers feel about your products.

- Respond quickly and effectively to negative feedback, fostering trust and loyalty.

MAP Monitoring

Don’t let unauthorized price drops damage your brand. Web scraping for MAP monitoring allows you to:

- Proactively identify and address MAP violations across online marketplaces.

- Protect your brand image by ensuring consistent pricing for your products.
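Once listings have been scraped, the MAP check itself is a simple comparison. The sketch below assumes the scraper has already produced a list of listings. The SKUs, seller names, prices, and the `find_map_violations` helper are all hypothetical.

```python
# Hypothetical minimum advertised prices agreed with the manufacturer.
MAP_PRICES = {"desk-lamp": 24.99, "office-chair": 129.00}

# Hypothetical listings, in the shape a scraper might return them.
LISTINGS = [
    {"sku": "desk-lamp", "seller": "ShopA", "price": 24.99},
    {"sku": "desk-lamp", "seller": "ShopB", "price": 19.99},
    {"sku": "office-chair", "seller": "ShopA", "price": 135.00},
    {"sku": "office-chair", "seller": "ShopC", "price": 110.00},
]


def find_map_violations(listings, map_prices):
    """Return (seller, sku, price) for every listing priced below its MAP."""
    violations = []
    for listing in listings:
        minimum = map_prices.get(listing["sku"])
        # Products without an agreed MAP are skipped rather than flagged.
        if minimum is not None and listing["price"] < minimum:
            violations.append((listing["seller"], listing["sku"], listing["price"]))
    return violations


violations = find_map_violations(LISTINGS, MAP_PRICES)
for seller, sku, price in violations:
    print(f"{seller} lists {sku} at {price}, below MAP {MAP_PRICES[sku]}")
```

A listing priced exactly at the MAP is treated as compliant here. Whether that matches your agreements is a business decision, not a technical one.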

Business Automation

Don’t waste time wrestling with internal data access. Web scraping provides a faster and more efficient way to:

- Extract data from your own or partner websites in a structured format.

- Bypass cumbersome internal systems and get the data you need quickly.

Lead Generation

Generating leads is a prerequisite for the success and survival of any business, but it is also a huge challenge. In 2020, HubSpot reported that more than 60% of marketers struggled to generate leads.

Web scraping can be a perfect tool to help you find potential customers faster and easier.

How Web Scraping Can Help You Generate More Leads:

- Target the Right Audience: Identify potential customers who fit your ideal profile by scraping data from relevant websites and directories.

- Save Time and Resources: Stop wasting time on manual lead generation. Web scraping can automate the process of finding and collecting contact information.

- Build a Targeted Lead List: Scrape data from a website to create a comprehensive list of potential leads for your sales team to follow up with.

- Increase Conversion Rates: By focusing on qualified leads, you’ll improve your chances of converting them into paying customers.

How to Scrape Data from a Website with RPA Cloud

RPA Cloud takes the complexity out of web scraping, allowing you to gather valuable data from the web without needing any coding experience.

Here’s how to scrape data from a website with RPA Cloud:

- Scrape Any Website: Extract data from any website, even those with infinite scrolling or requiring logins.

- Effortless Data Extraction: Point and click to select the information you need. No programming language required!

- Structured and Organized Data: RPA Cloud automatically organizes the extracted data in a clear, usable format for easy analysis.

RPA Cloud is perfect for businesses of all sizes. Whether you’re a small business owner or a large enterprise, you can leverage the power of web scraping to gain a competitive edge.
